PCDM uses pretrained Latent Diffusion Models (LDMs) to perform zero-shot No-Reference Image Quality Assessment (NR-IQA).

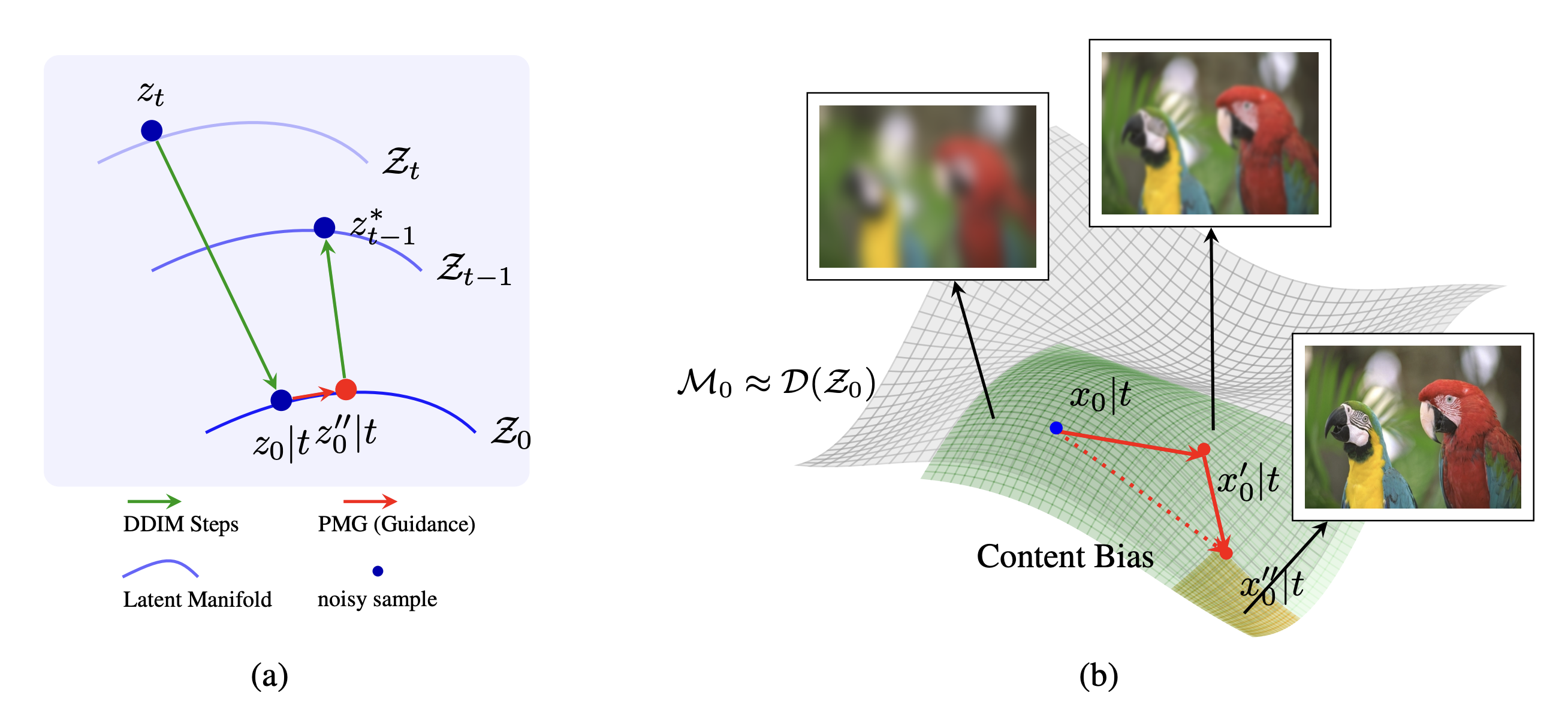

An overview of our proposed approach: (a) shows how latent samples transition across latent manifolds, highlighting the DDIM and Perceptual Manifold Guidance (PMG) steps. (b) depicts the content bias (green) on the manifold $\mathcal{M}_0 \approx \mathcal{D}(\mathcal{Z}_0)$ and shows how the PMG guidance term (red) pushes a data sample $x'_{0|t} \sim \mathcal{D}(z'_{0|t})$ towards the perceptually consistent region (yellow) of the manifold.
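To make the interplay between a DDIM step and a guidance term concrete, here is a minimal sketch of one gradient-guided deterministic DDIM step. It predicts the clean latent $z_{0|t}$, nudges it against the gradient of a loss to obtain $z'_{0|t}$, and re-noises it to timestep $t-1$. This is a generic manifold-guidance pattern, not the authors' PMG implementation: `grad_fn` is a hypothetical stand-in for the gradient of a perceptual loss, the decoder $\mathcal{D}$ is skipped (treated as identity), and latents are plain Python lists.

```python
import math
from typing import Callable, List

Vector = List[float]


def ddim_step_with_guidance(
    z_t: Vector,
    eps: Vector,
    alpha_bar_t: float,
    alpha_bar_prev: float,
    grad_fn: Callable[[Vector], Vector],
    guidance_scale: float,
) -> Vector:
    """One deterministic DDIM step (eta = 0) with a gradient correction
    applied to the predicted clean latent z_{0|t}.

    grad_fn is a hypothetical placeholder for the gradient of a perceptual
    loss; it is NOT the PMG objective from the paper, and the decoder D is
    skipped (identity) to keep the sketch self-contained.
    """
    # Predicted clean latent z_{0|t} from the noise estimate eps
    z0 = [(z - math.sqrt(1.0 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
          for z, e in zip(z_t, eps)]
    # Guidance: step against the loss gradient to obtain z'_{0|t}
    grad = grad_fn(z0)
    z0_guided = [z - guidance_scale * g for z, g in zip(z0, grad)]
    # Deterministic DDIM update to timestep t-1, reusing the same eps
    return [math.sqrt(alpha_bar_prev) * z + math.sqrt(1.0 - alpha_bar_prev) * e
            for z, e in zip(z0_guided, eps)]


# Toy usage: quadratic loss 0.5 * ||z - target||^2, whose gradient is z - target
target = [0.0, 0.0]


def toy_grad(z: Vector) -> Vector:
    return [zi - ti for zi, ti in zip(z, target)]


z_prev = ddim_step_with_guidance([1.0, -1.0], [0.0, 0.0], 0.25, 1.0, toy_grad, 0.5)
print(z_prev)  # [1.0, -1.0]
```

Without guidance (`guidance_scale=0.0`) the same call returns `[2.0, -2.0]`; the guidance term pulls the predicted sample halfway toward the toy target.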

Abstract

Despite recent advances in latent diffusion models that generate high-dimensional image data and perform various downstream tasks, there has been little exploration of perceptual consistency within these models for No-Reference Image Quality Assessment (NR-IQA). In this paper, we hypothesize that latent diffusion models implicitly exhibit perceptually consistent local regions within the data manifold. We leverage this insight to guide on-manifold sampling using perceptual features and input measurements. Specifically, we propose Perceptual Manifold Guidance (PMG), an algorithm that uses pretrained latent diffusion models and perceptual quality metrics to obtain perceptually consistent multi-scale and multi-timestep feature maps from the denoising U-Net. We empirically demonstrate that these hyperfeatures correlate highly with human perception in IQA tasks. Our method can be applied to any existing pretrained latent diffusion model and is straightforward to integrate. To the best of our knowledge, this paper is the first work to explore Perceptual Consistency in Diffusion Models (PCDM) and apply it to the NR-IQA problem in a zero-shot setting. Extensive experiments on IQA datasets show that our method, PCDM, achieves state-of-the-art performance, underscoring the strong zero-shot generalization of diffusion models for NR-IQA tasks.

Proposed Method

(a)

(b)

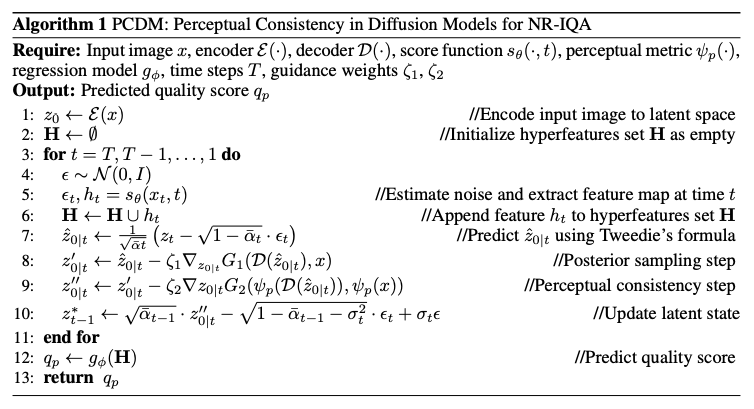

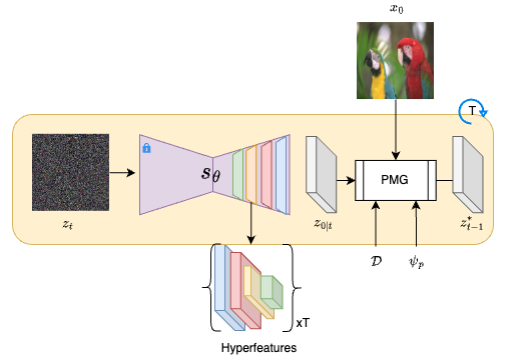

We introduce the Perceptually Consistent Diffusion Model (PCDM) for No-Reference Image Quality Assessment (NR-IQA), leveraging the robust representations learned by pretrained latent diffusion models (LDMs). Our method employs Perceptual Manifold Guidance (PMG) to steer the diffusion sampling process toward perceptually consistent regions of the data manifold, making the model more sensitive to variations in image quality. We then extract multi-scale and multi-timestep features, termed diffusion hyperfeatures, from the denoising U-Net within the LDM. Because they span several U-Net resolutions and denoising timesteps, these hyperfeatures capture image structure at multiple levels of detail, giving a rich representation for quality assessment. As illustrated in (a), the overall PCDM procedure guides the sampling process and then extracts hyperfeatures for quality prediction. Panel (b) shows the hyperfeature extraction step, in which features are aggregated across scales and timesteps. To our knowledge, this is the first work to use pretrained LDMs for NR-IQA in a zero-shot manner, and we hope it lays the groundwork for future research in this area.
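The hyperfeature idea (features collected from several U-Net scales at several timesteps and combined into one descriptor) can be sketched as follows. This is an illustration only: it swaps in simple global average pooling and concatenation in a fixed `(timestep, scale)` order, whereas the authors' aggregation may differ (for example, a learned aggregation network). Feature maps are hypothetical nested lists of shape `[channels][height][width]`, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

FeatureMap = List[List[List[float]]]  # [channels][height][width]


def global_avg_pool(fmap: FeatureMap) -> List[float]:
    """Average each channel over all of its spatial positions."""
    pooled = []
    for channel in fmap:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def build_hyperfeature(features: Dict[Tuple[int, int], FeatureMap]) -> List[float]:
    """Concatenate pooled U-Net features from every (timestep, scale) pair.

    Keys are (timestep, scale_index) pairs (a hypothetical convention);
    sorting them fixes the order so descriptors are comparable across images.
    """
    hyper: List[float] = []
    for key in sorted(features):
        hyper.extend(global_avg_pool(features[key]))
    return hyper


# Toy usage: two timesteps, two scales, maps of different sizes and depths
feats = {
    (500, 0): [[[1.0, 2.0], [3.0, 4.0]]],      # 1 channel, 2x2
    (500, 1): [[[0.0]], [[2.0]]],              # 2 channels, 1x1
    (100, 0): [[[5.0, 5.0], [5.0, 5.0]]],      # 1 channel, 2x2
}
print(build_hyperfeature(feats))  # [5.0, 2.5, 0.0, 2.0]
```

Pooling first makes maps of different resolutions compatible, so the final descriptor length depends only on the channel counts, not on the spatial sizes.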

Results

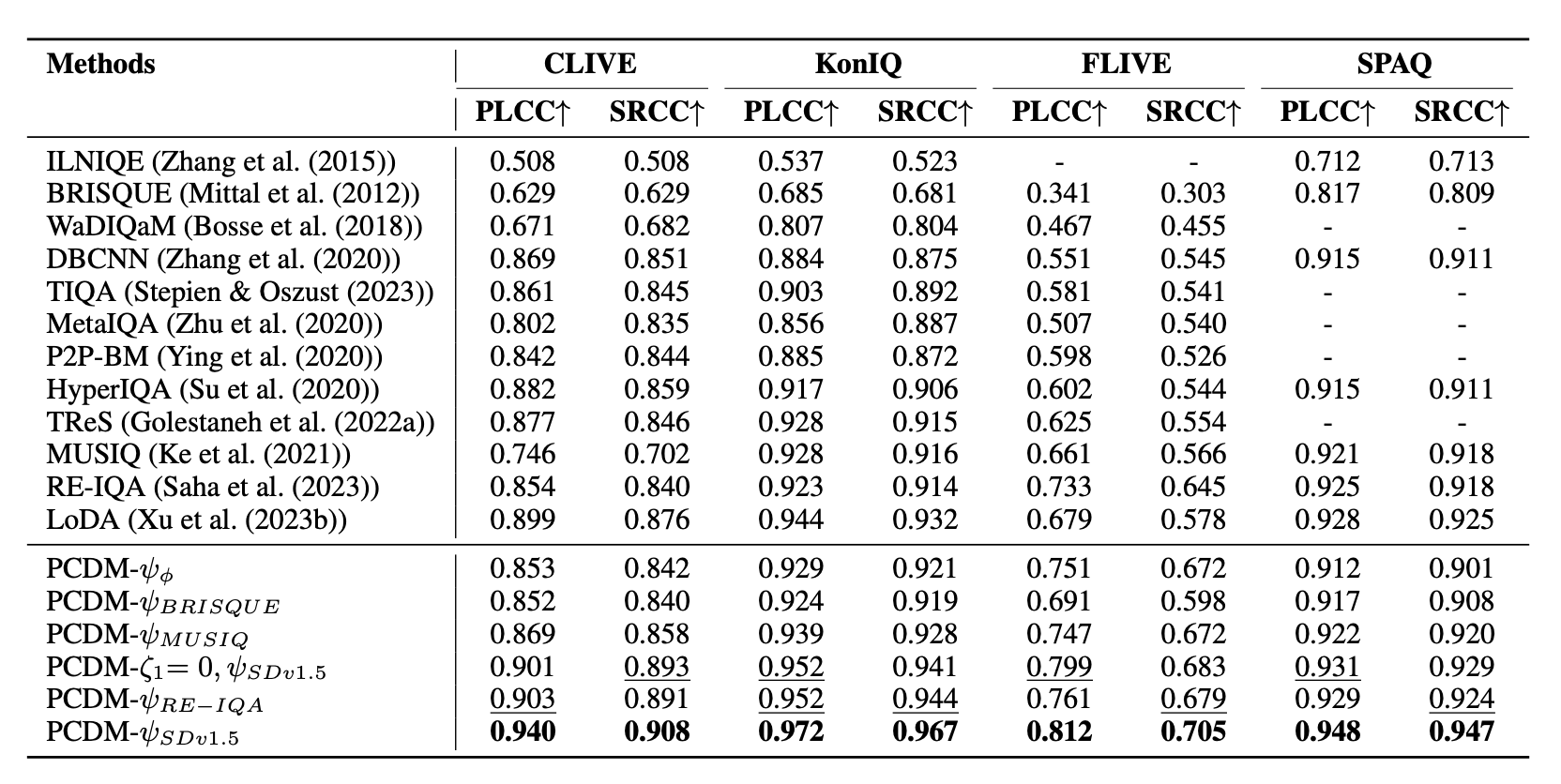

1. Authentic Distortion

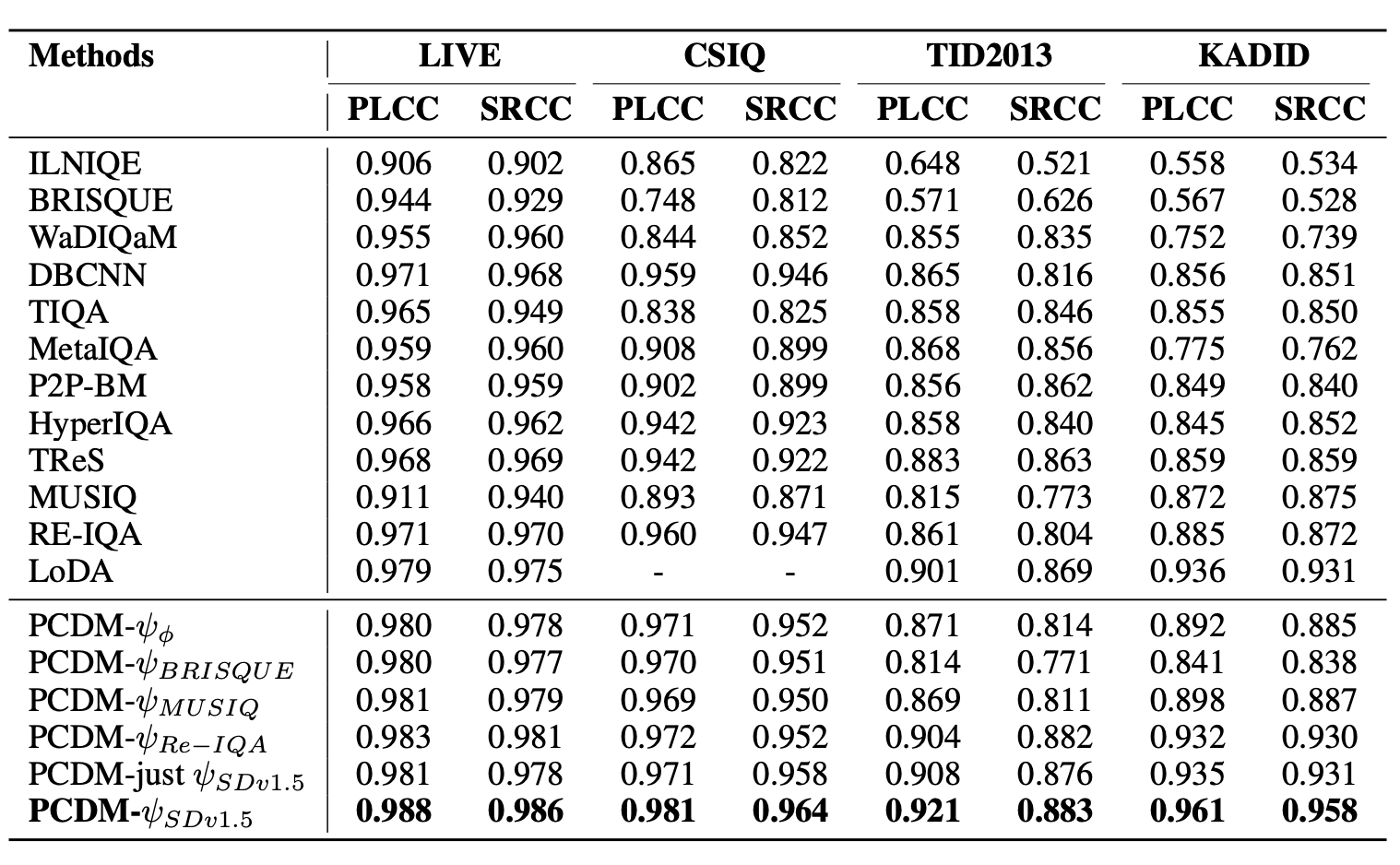

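Comparison of PCDM with state-of-the-art (SOTA) NR-IQA methods in terms of Pearson linear correlation coefficient (PLCC) and Spearman rank-order correlation coefficient (SRCC) on authentic-distortion IQA datasets.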

2. Synthetic Distortion

Comparison of PCDM with SOTA NR-IQA methods in terms of PLCC and SRCC on synthetic-distortion IQA datasets.

3. AIGC

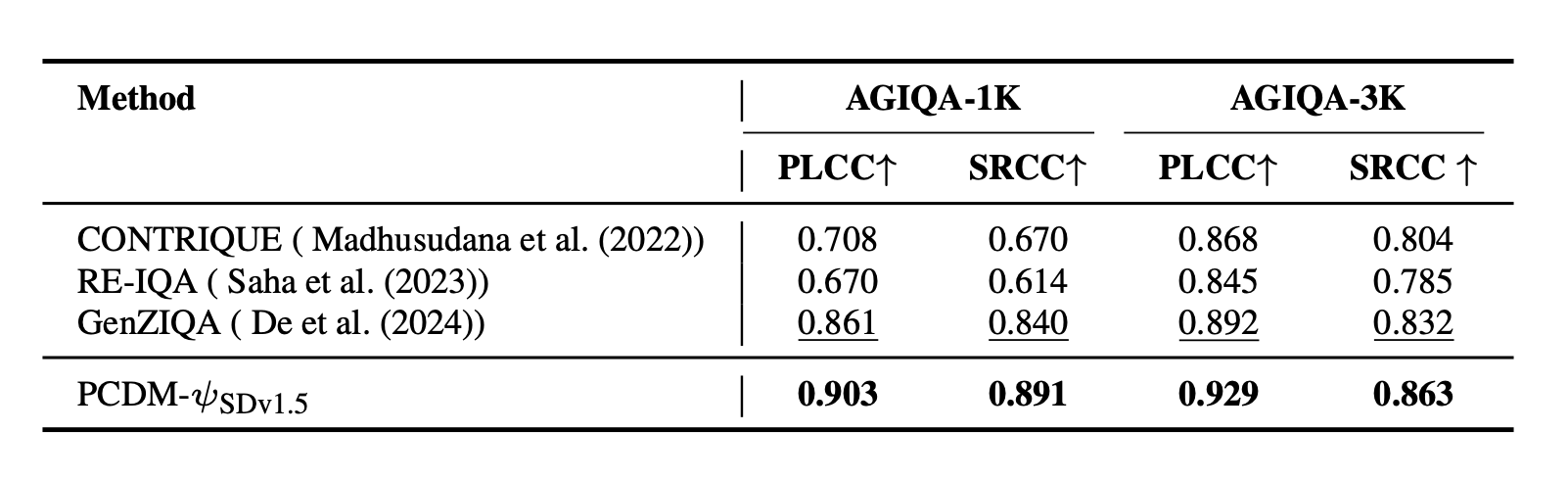

PLCC and SRCC comparison of PCDM on AI-generated image (AIGC) IQA datasets.
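All of the comparisons above report PLCC and SRCC between predicted quality scores and human mean opinion scores (MOS). As a reference for what those numbers measure, here is a small dependency-free sketch of both metrics. Many IQA papers fit a nonlinear logistic mapping to the predictions before computing PLCC; this sketch omits that step, and whether the authors apply it is not stated here. The example scores are made up.

```python
import math
from typing import List, Sequence


def plcc(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson linear correlation coefficient (assumes neither input is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def rank(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def srcc(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(rank(x), rank(y))


# Toy usage with made-up predictions and MOS values
pred = [0.1, 0.4, 0.35, 0.8]
mos = [1.0, 3.0, 2.5, 4.5]
print(round(srcc(pred, mos), 4))  # 1.0 (identical orderings)
```

SRCC only checks whether the predicted ordering matches the human ordering, while PLCC also rewards a linear relationship between the scores, which is why the two are usually reported together.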